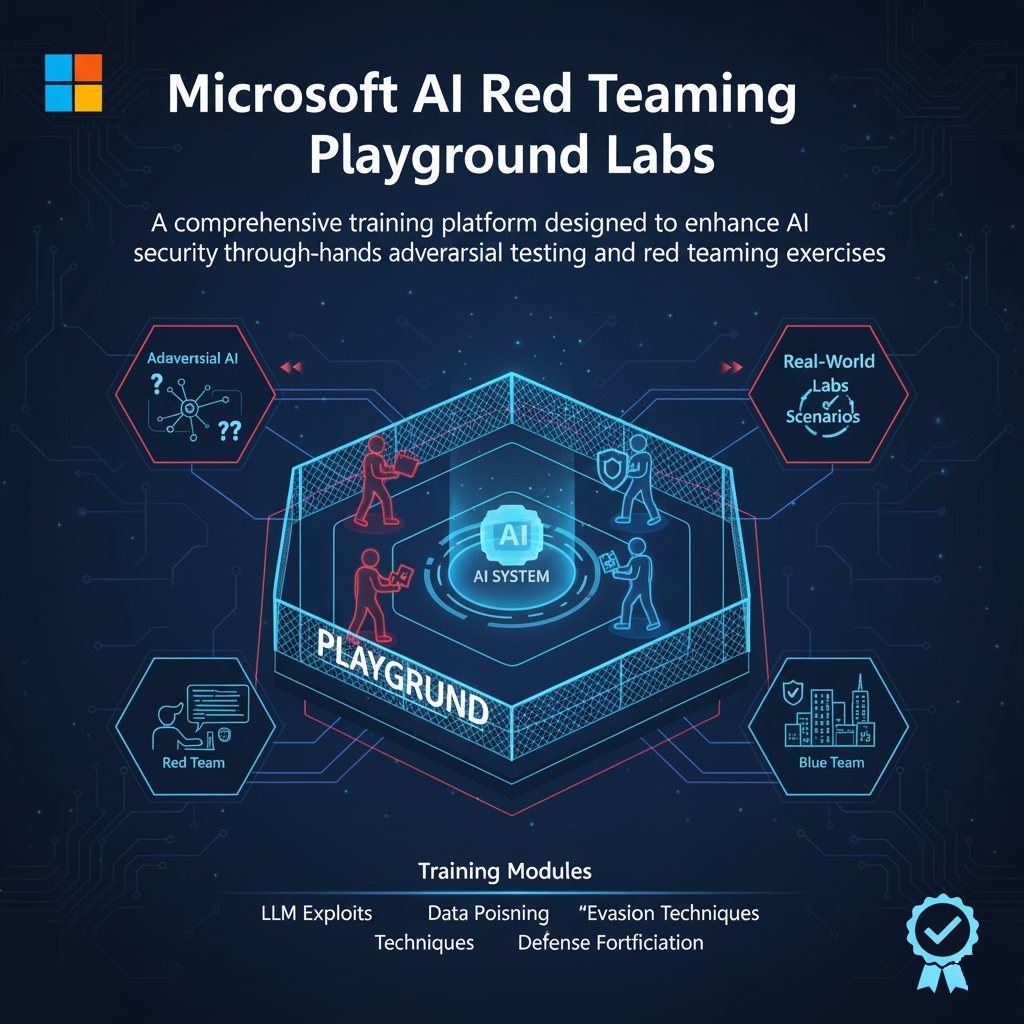

Microsoft AI Red TeamingPlayground Labs

A comprehensive training platform designed to enhance AI security through hands-on adversarial testing and red teaming exercises.

Mission

To empower security researchers, AI practitioners, and organizations with practical tools and scenarios for identifying and mitigating AI vulnerabilities before they can be exploited in production environments.

Why Use These Labs?

Comprehensive features designed for effective AI security training

Technical: Includes containerized AI models, vulnerable applications, and monitoring tools

Technical: Covers prompt injection, data poisoning, model extraction, and adversarial attacks

Technical: Python-based exercises with detailed explanations and code examples

Technical: Beginner, intermediate, and expert challenges with scoring systems

Technical: Architecture diagrams, API references, and troubleshooting guides

Technical: Integration with PyRIT and other red teaming frameworks

What's Included

Complete infrastructure, vulnerable applications, and testing tools

Training Challenges

Progressive exercises from beginner to advanced levels

Learning Objectives

- Understand how LLMs process instructions

- Identify vulnerable prompt patterns

- Execute basic prompt injection attacks

- Document findings and impact

Skills Developed

Learning Objectives

- Understand model memorization

- Craft queries to elicit sensitive information

- Identify PII in model outputs

- Assess data leakage risks

Skills Developed

Learning Objectives

- Study multi-turn conversation exploits

- Implement role-playing attacks

- Use encoding and obfuscation

- Chain multiple techniques

Skills Developed

Learning Objectives

- Design efficient query strategies

- Collect and analyze model responses

- Train a surrogate model

- Evaluate extraction success

Skills Developed

Learning Objectives

- Implement FGSM and PGD attacks

- Generate transferable adversarial examples

- Test robustness of defenses

- Optimize attack efficiency

Skills Developed

Learning Objectives

- Understand model training pipelines

- Design subtle poisoning strategies

- Implement trigger-based backdoors

- Evade detection mechanisms

Skills Developed

Getting Started

Follow these steps to set up your red teaming environment

Ensure you have the required tools and knowledge

- Docker Desktop or Docker Engine installed

- Basic understanding of AI/ML concepts

- Familiarity with command line interfaces

- Python 3.8+ (for notebook exercises)

- Git for cloning the repository

Download the lab materials to your local machine

git clone https://github.com/microsoft/AI-Red-Teaming-Playground-Labs.gitNote: This includes all challenges, notebooks, and infrastructure code

Set up your API keys and configuration

- Copy .env.example to .env

- Add your OpenAI or Azure OpenAI API keys

- Configure model endpoints and parameters

- Review security settings

Launch the vulnerable applications and tools

docker-compose up -dNote: Wait for all services to be healthy before proceeding

Navigate to the lab interface and start learning

Jupyter Notebooks: http://localhost:8888Vulnerable Chatbot: http://localhost:3000Monitoring Dashboard: http://localhost:8080Work through challenges from beginner to advanced

- Start with beginner challenges to understand the environment

- Read the challenge documentation thoroughly

- Document your findings and techniques

- Share learnings with the community

Benefits for Everyone

Value for researchers, practitioners, organizations, and policymakers

- Access to realistic AI vulnerability scenarios

- Platform for testing new attack techniques

- Reproducible environment for research papers

- Community collaboration opportunities

- Hands-on experience with AI security threats

- Practical skills for securing AI systems

- Understanding of attacker methodologies

- Portfolio of red teaming exercises

- Training platform for security teams

- Assessment of AI security posture

- Development of internal security guidelines

- Validation of AI security controls

- Understanding of AI threat landscape

- Evidence-based policy development

- Risk assessment frameworks

- Regulatory compliance insights

Additional Resources

Documentation, tools, and learning materials to enhance your red teaming skills

Contribute

Submit new challenges, improve documentation, or fix bugs

Create a pull request on GitHubShare Findings

Document your discoveries and techniques

Write blog posts or create tutorialsReport Issues

Help improve the labs by reporting bugs or suggesting features

Open an issue on GitHubParticipate in Discussions

Ask questions and share insights with the community

Join GitHub DiscussionsReady to Start Red Teaming?

Clone the repository and begin your journey into AI security testing today