Generative AI Security

As AI systems become capable of creating hyper-realistic images, videos, and audio, the line between authentic and synthetic content blurs. Understanding and defending against these threats is critical for businesses, governments, and individuals.

This page analyzes the security challenges of generative AI systems, including deepfake detection, synthetic media authentication, and adversarial attacks on AI-generated content.

Understanding Generative AI Security

Generative AI has revolutionized content creation, but it also introduces significant security challenges. This section covers the underlying technologies, the risks they create, and why they matter for your organization.

Generative AI systems use machine learning models to create new content (images, videos, audio, and text) that can be indistinguishable from human-created content. These systems include:

- GANs (Generative Adversarial Networks): A generator network and a discriminator network are trained against each other, pushing the generator toward increasingly realistic content

- Diffusion Models: Models that generate content by iteratively denoising random noise; text-to-image systems such as Stable Diffusion and later versions of DALL-E use this approach

- VAEs (Variational Autoencoders): Models that learn to compress data into a latent space and reconstruct it; sampling from that latent space generates new content
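To make the diffusion idea concrete, here is a minimal sketch of the forward (noising) process used to train DDPM-style diffusion models: a clean sample `x0` is mixed with Gaussian noise according to a schedule, and the network is trained to predict and remove that noise. The linear beta schedule (1e-4 to 0.02 over 1000 steps) follows the original DDPM defaults and is an assumption here; production systems such as Stable Diffusion differ in details, for example by running the process in a compressed latent space.

```javascript
// Forward (noising) process of a DDPM-style diffusion model, in plain JS.
// x_t = sqrt(alphaBar_t) * x0 + sqrt(1 - alphaBar_t) * noise

// Linear beta schedule; alphaBar[t] is the running product of (1 - beta).
function linearAlphaBars(steps = 1000, betaStart = 1e-4, betaEnd = 0.02) {
  const alphaBars = [];
  let product = 1;
  for (let t = 0; t < steps; t++) {
    const beta = betaStart + ((betaEnd - betaStart) * t) / (steps - 1);
    product *= 1 - beta;
    alphaBars.push(product);
  }
  return alphaBars;
}

// Standard normal sample via the Box-Muller transform.
function gaussian() {
  const u1 = 1 - Math.random(); // in (0, 1], avoids log(0)
  const u2 = Math.random();
  return Math.sqrt(-2 * Math.log(u1)) * Math.cos(2 * Math.PI * u2);
}

// Noise a clean sample x0 (array of numbers) directly to the step whose
// cumulative product is alphaBar. Passing `noise` makes it deterministic.
function noiseSample(x0, alphaBar, noise = x0.map(gaussian)) {
  const signal = Math.sqrt(alphaBar);
  const spread = Math.sqrt(1 - alphaBar);
  return x0.map((x, i) => signal * x + spread * noise[i]);
}
```

During training the model sees `noiseSample(x0, alphaBars[t], eps)` and learns to predict `eps`; generation runs the learned process in reverse, starting from pure noise.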

The security landscape encompasses deepfake generation, synthetic media detection, model inversion attacks, adversarial examples, and the broader implications of AI-generated content on information integrity and trust. Organizations face risks ranging from fraud and reputation damage to regulatory compliance and legal liability.

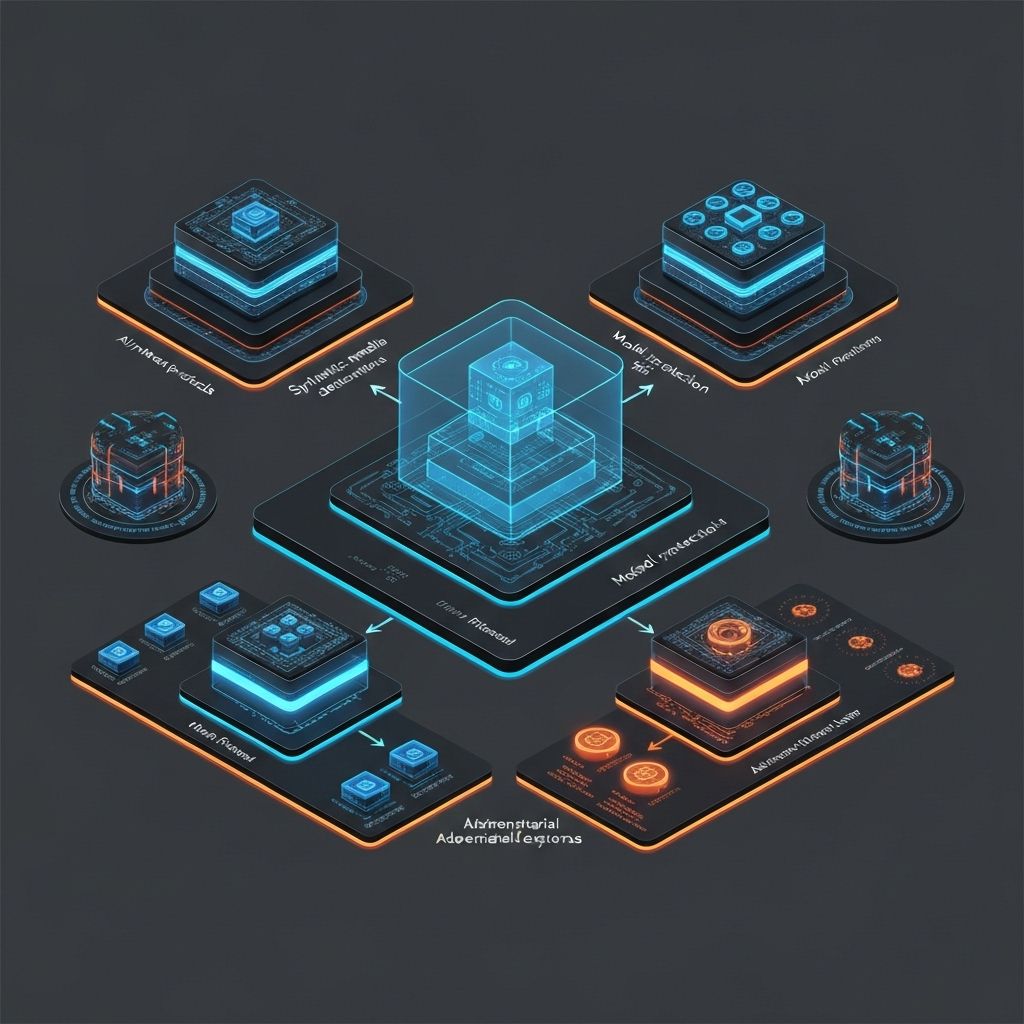

Key Security Domains

- Deepfake Detection & Prevention

- Synthetic Media Authentication

- Model Inversion & Data Extraction

- Adversarial Content Generation

Affected Technologies

- Stable Diffusion & DALL-E

- Face Swap Applications

- Voice Synthesis Systems

- Video Generation Models

Detection

Identify synthetic content using AI and forensic techniques before it causes harm

Authentication

Verify content authenticity and provenance using cryptographic methods

Prevention

Implement safeguards against malicious generation at the source

Response

Rapid mitigation of synthetic media threats when detected

AI-Generated Disinformation Campaign

Large-scale synthetic media campaign detected across social platforms, targeting multiple countries.

Active Threat

Celebrity Deepfake Fraud Ring

Criminal network using AI-generated celebrity content for investment fraud schemes.

Under Investigation

Threat Landscape

Understanding the various types of generative AI threats helps organizations prepare appropriate defenses. Each threat category presents unique challenges and requires specific mitigation strategies.

Deepfake Categories

- Face swap deepfakes: Replace one person's face with another in videos

- Voice cloning: Synthesize realistic speech in anyone's voice

- Full body puppeteering: Control entire body movements in videos

- Real-time generation: Create deepfakes during live video calls

Who's at Risk?

- Executives: Identity theft and corporate fraud

- Public figures: Reputation damage and misinformation

- Financial institutions: Authentication bypass and fraud

- General public: Non-consensual intimate imagery

Generation Methods

- Diffusion models: Image generation with systems such as Stable Diffusion and DALL-E

- GANs: Create realistic faces, objects, and scenes

- VAEs: Generate variations of existing content

- Transformers: Text-to-image and text-to-video generation

Security Concerns

- Copyright infringement: Unauthorized use of copyrighted works in training data

- Misinformation: Fake news images and videos

- Brand impersonation: Counterfeit marketing materials

- Evidence tampering: Manipulated legal or forensic evidence

Attack Vectors

- Adversarial prompts: Carefully crafted inputs to manipulate outputs

- Model poisoning: Corrupt training data to influence behavior

- Backdoor insertion: Hidden triggers that activate malicious behavior

- Gradient attacks: Use gradients computed from the model's internals to craft inputs that force specific outputs
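Gradient attacks are easiest to see on a tiny differentiable model. The sketch below applies the Fast Gradient Sign Method (FGSM) to a hand-written logistic-regression classifier: it nudges each input feature by `epsilon` in the direction that increases the model's loss. The weights, input, and epsilon are illustrative assumptions; real attacks on generative or detection models compute the same input gradient through a deep network with an autodiff framework.

```javascript
// FGSM against a toy logistic-regression classifier (white-box attack).

const sigmoid = (z) => 1 / (1 + Math.exp(-z));

// Probability that input x belongs to class 1.
function predict(weights, bias, x) {
  const z = x.reduce((sum, xi, i) => sum + weights[i] * xi, bias);
  return sigmoid(z);
}

// Gradient of binary cross-entropy loss with respect to the input x.
// For logistic regression this is (p - y) * w.
function inputGradient(weights, bias, x, label) {
  const p = predict(weights, bias, x);
  return weights.map((w) => (p - label) * w);
}

// x_adv = x + epsilon * sign(dL/dx): the step that maximizes the first-order
// approximation of the loss under an L-infinity budget of epsilon.
function fgsm(weights, bias, x, label, epsilon) {
  const grad = inputGradient(weights, bias, x, label);
  return x.map((xi, i) => xi + epsilon * Math.sign(grad[i]));
}

// Example: push a correctly classified input toward the wrong class.
const weights = [2, -1];
const bias = 0;
const x = [1, 1];
const xAdv = fgsm(weights, bias, x, 1, 0.5);
console.log(predict(weights, bias, x).toFixed(3));    // 0.731
console.log(predict(weights, bias, xAdv).toFixed(3)); // 0.378
```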

Potential Consequences

- Content quality degradation affecting user trust

- Bias amplification leading to discriminatory outputs

- Harmful content generation bypassing safety measures

- Model behavior manipulation for competitive advantage

Extraction Methods

- Membership inference: Determine if specific data was used in training

- Data reconstruction: Recreate original training samples

- Parameter analysis: Extract information from model weights

- Latent space exploration: Navigate model internals to find data
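Membership inference often starts from a simple observation: models tend to have lower loss on examples they were trained on. The sketch below implements that loss-threshold baseline, assuming the attacker can obtain a per-example loss (or a proxy such as reconstruction or denoising error for a generative model) and has a small calibration set of known members and non-members. The loss values in the example are invented for illustration.

```javascript
// Loss-threshold membership inference: predict "member" when loss <= threshold.

function inferMembership(losses, threshold) {
  return losses.map((loss) => loss <= threshold);
}

// Attack advantage = true positive rate - false positive rate.
function attackAdvantage(memberLosses, nonMemberLosses, threshold) {
  const rate = (losses) =>
    inferMembership(losses, threshold).filter(Boolean).length / losses.length;
  return rate(memberLosses) - rate(nonMemberLosses);
}

// Pick the threshold (among observed loss values) that maximizes the
// advantage on a labelled calibration set.
function calibrateThreshold(memberLosses, nonMemberLosses) {
  const candidates = [...memberLosses, ...nonMemberLosses].sort((a, b) => a - b);
  let best = { threshold: candidates[0], advantage: -Infinity };
  for (const threshold of candidates) {
    const advantage = attackAdvantage(memberLosses, nonMemberLosses, threshold);
    if (advantage > best.advantage) best = { threshold, advantage };
  }
  return best;
}

// Invented losses: members were seen in training, non-members were not.
const members = [0.1, 0.2, 0.3];
const nonMembers = [0.4, 0.5, 0.6];
console.log(calibrateThreshold(members, nonMembers)); // { threshold: 0.3, advantage: 1 }
```

An advantage near 0 means the losses do not separate members from non-members; values approaching 1 indicate strong memorization of the training data.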

Privacy Risks

- Personal data exposure (names, addresses, photos)

- Proprietary content leakage (trade secrets, designs)

- Biometric information theft (faces, fingerprints)

- Intellectual property violation (copyrighted works)

Detection Methods

Effective deepfake detection requires a multi-layered approach combining AI-based analysis, forensic techniques, and authentication systems. Understanding when and how to use each method is crucial for building robust defenses against synthetic media threats.

Detection Models

- Convolutional Neural Networks (CNNs)

- Vision Transformers (ViTs)

- Ensemble classifiers

- Temporal consistency models
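Ensemble classifiers combine several detectors so that one model's blind spot is less likely to decide the outcome. A common way to fuse them is weighted soft voting, which averages each detector's probability that the media is synthetic. The sketch below assumes every detector already returns such a probability in [0, 1]; the weights and the 0.5 decision threshold are illustrative defaults, not recommended values.

```javascript
// Weighted soft-voting ensemble over per-detector "synthetic" probabilities.

function ensembleScore(scores, weights = scores.map(() => 1)) {
  if (scores.length === 0 || scores.length !== weights.length) {
    throw new Error('scores and weights must be non-empty and the same length');
  }
  const totalWeight = weights.reduce((sum, w) => sum + w, 0);
  const weighted = scores.reduce((sum, s, i) => sum + s * weights[i], 0);
  return weighted / totalWeight;
}

// Label media synthetic when the fused probability reaches the threshold.
function ensembleVerdict(scores, weights, threshold = 0.5) {
  return ensembleScore(scores, weights) >= threshold ? 'synthetic' : 'authentic';
}

// Example: a CNN, a ViT, and a temporal-consistency model disagree.
console.log(ensembleVerdict([0.9, 0.7, 0.2]));            // synthetic
console.log(ensembleVerdict([0.9, 0.7, 0.2], [1, 1, 4])); // authentic
```

Passing `undefined` for `weights` falls back to equal weights, so the first call is a plain average.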

Performance Metrics

- Detection accuracy above 95%

- Low false positive rates

- Real-time processing capabilities

- Robust cross-dataset generalization
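Accuracy and false positive rate both come from the same confusion matrix, so it helps to compute them together. The sketch below derives them from a detector's binary predictions, treating "synthetic" as the positive class (an assumption; some teams report metrics with authentic as positive). The labels and predictions are made up for illustration.

```javascript
// Detection metrics from binary labels/predictions (true = synthetic).

function detectionMetrics(labels, predictions) {
  let tp = 0, fp = 0, tn = 0, fn = 0;
  labels.forEach((isFake, i) => {
    const flagged = predictions[i];
    if (isFake && flagged) tp++;
    else if (isFake) fn++;
    else if (flagged) fp++;
    else tn++;
  });
  // Avoid division by zero when a class is absent.
  const ratio = (a, b) => (b === 0 ? 0 : a / b);
  return {
    accuracy: ratio(tp + tn, labels.length),
    precision: ratio(tp, tp + fp),
    recall: ratio(tp, tp + fn),            // share of fakes caught
    falsePositiveRate: ratio(fp, fp + tn), // share of authentic media flagged
  };
}

const labels = [true, true, true, false, false, false, false, false];
const predictions = [true, true, false, true, false, false, false, false];
console.log(detectionMetrics(labels, predictions));
// accuracy 0.75, precision ~0.667, recall ~0.667, falsePositiveRate 0.2
```

Reporting recall and false positive rate alongside accuracy matters because real-world traffic is mostly authentic, so a detector can post high accuracy while still missing many fakes.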

Analysis Techniques

- Pixel-level inconsistencies

- Compression artifact analysis

- Lighting and shadow inconsistencies

- Facial landmark tracking and anomalies
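Facial landmark tracking can expose face swaps that flicker or jump between frames. A minimal sketch: given landmark coordinates per frame (from a face tracker, which is assumed here), measure the mean landmark displacement between consecutive frames and flag transitions that exceed a multiple of the clip's median motion. The factor of 3 is an illustrative assumption, and production detectors usually learn such cues rather than hand-thresholding them.

```javascript
// Flag frames whose facial landmarks jump abnormally relative to the clip.
// frames: array of frames; each frame is an array of [x, y] landmark points
// in the same order (as produced by an assumed external face tracker).

function meanDisplacement(prev, curr) {
  let total = 0;
  for (let i = 0; i < prev.length; i++) {
    total += Math.hypot(curr[i][0] - prev[i][0], curr[i][1] - prev[i][1]);
  }
  return total / prev.length;
}

function median(values) {
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}

// Returns indices of frames reached by an abnormally large jump.
function flagLandmarkJumps(frames, factor = 3) {
  const motion = [];
  for (let f = 1; f < frames.length; f++) {
    motion.push(meanDisplacement(frames[f - 1], frames[f]));
  }
  if (motion.length === 0) return [];
  const baseline = median(motion);
  const flagged = [];
  motion.forEach((m, i) => {
    // With a static clip (baseline 0), any movement at all is flagged.
    if (m > factor * baseline && m > 0) flagged.push(i + 1);
  });
  return flagged;
}

// Example: two landmarks drift 1px per frame, with a 10px jump into frame 4.
const offsets = [0, 1, 2, 3, 13, 14, 15];
const clip = offsets.map((dx) => [[100 + dx, 50], [140 + dx, 50]]);
console.log(flagLandmarkJumps(clip)); // [ 4 ]
```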

Forensic Tools

- Metadata examination (EXIF, XMP)

- Frequency domain analysis (FFT)

- Noise pattern detection

- Temporal coherence checks
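Frequency domain analysis is useful because the upsampling layers in many generators leave periodic artifacts that appear as excess high-frequency energy in the spectrum. The sketch below measures the share of non-DC spectral energy above a cutoff for a single row of grayscale pixel values. It uses a naive O(n²) discrete Fourier transform for readability; an FFT yields the same spectrum faster, and a real tool would analyze the full 2-D image and compare against a baseline from known-authentic images. The 0.5 cutoff is an assumption.

```javascript
// Share of spectral energy at high frequencies for one row of pixel values.

// Magnitudes of DFT bins 0..n/2 (naive O(n^2) transform, same result as an FFT).
function dftMagnitudes(signal) {
  const n = signal.length;
  const magnitudes = [];
  for (let k = 0; k <= Math.floor(n / 2); k++) {
    let re = 0;
    let im = 0;
    for (let t = 0; t < n; t++) {
      const angle = (2 * Math.PI * k * t) / n;
      re += signal[t] * Math.cos(angle);
      im -= signal[t] * Math.sin(angle);
    }
    magnitudes.push(Math.hypot(re, im));
  }
  return magnitudes;
}

// Fraction of non-DC energy in bins above `cutoff` (0..1 of the band).
function highFrequencyRatio(signal, cutoff = 0.5) {
  // Drop bin 0 (DC) so overall brightness does not dominate the ratio.
  const energy = dftMagnitudes(signal).slice(1).map((m) => m * m);
  const total = energy.reduce((sum, e) => sum + e, 0);
  if (total < 1e-9) return 0; // flat row: no AC energy to analyze
  const start = Math.floor(energy.length * cutoff);
  const high = energy.slice(start).reduce((sum, e) => sum + e, 0);
  return high / total;
}

// A slowly varying row vs. a row with a pixel-level checkerboard pattern.
const smooth = Array.from({ length: 16 }, (_, t) => 128 + 40 * Math.cos((2 * Math.PI * t) / 16));
const checker = Array.from({ length: 16 }, (_, t) => 128 + (t % 2 ? 40 : -40));
console.log(highFrequencyRatio(smooth) < 0.01);  // true
console.log(highFrequencyRatio(checker) > 0.99); // true
```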

Provenance Tracking

- Content origin verification

- Immutable audit trails

- Cryptographic digital signatures

- Timestamp validation
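Cryptographic digital signatures and timestamp validation can be combined into a simple signed provenance record. The sketch below uses Node.js's built-in `crypto` module with Ed25519 keys: it hashes the content with SHA-256, signs that hash together with the creator and a timestamp, and on verification checks the signature, the hash, and that the timestamp is not in the future. The record format and the `newsroom-camera-01` creator ID are hypothetical and invented for illustration; this is not the C2PA manifest format.

```javascript
const crypto = require('crypto');

const sha256 = (data) => crypto.createHash('sha256').update(data).digest('hex');

// Sign a hypothetical provenance record binding content hash, creator, and time.
function createProvenanceRecord(content, creator, privateKey, timestamp = new Date().toISOString()) {
  const payload = JSON.stringify({ contentHash: sha256(content), creator, timestamp });
  // Ed25519 signing in Node takes `null` as the algorithm argument.
  const signature = crypto.sign(null, Buffer.from(payload), privateKey);
  return { payload, signature: signature.toString('base64') };
}

// Verify the signature, the content hash, and that the timestamp is not in
// the future (allowing `skewMs` of clock skew).
function verifyProvenanceRecord(content, record, publicKey, nowMs = Date.now(), skewMs = 5 * 60 * 1000) {
  const validSignature = crypto.verify(
    null,
    Buffer.from(record.payload),
    publicKey,
    Buffer.from(record.signature, 'base64'),
  );
  if (!validSignature) return false;
  const { contentHash, timestamp } = JSON.parse(record.payload);
  const signedAt = Date.parse(timestamp);
  return contentHash === sha256(content) && Number.isFinite(signedAt) && signedAt <= nowMs + skewMs;
}

// Example usage with a freshly generated Ed25519 key pair.
const { publicKey, privateKey } = crypto.generateKeyPairSync('ed25519');
const image = Buffer.from('example image bytes');
const record = createProvenanceRecord(image, 'newsroom-camera-01', privateKey);
console.log(verifyProvenanceRecord(image, record, publicKey));                  // true
console.log(verifyProvenanceRecord(Buffer.from('edited'), record, publicKey)); // false
```

Any edit to the content changes its SHA-256 hash, and any edit to the record breaks the signature, so either form of tampering fails verification.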

Implementation Standards

- C2PA (Coalition for Content Provenance and Authenticity)

- Content Credentials integration

- Decentralized verification mechanisms

- Cross-platform compatibility


Mitigation Strategies

Protecting against generative AI threats requires a comprehensive strategy that combines technical controls, policy frameworks, and rapid response capabilities. These approaches work together to prevent, detect, and respond to synthetic media threats.

Technical Controls

- Content authentication watermarks

- Biometric liveness detection

- Multi-factor verification for sensitive actions

- Real-time monitoring and anomaly detection systems
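For multi-factor verification of sensitive actions (for example, a payment request that arrives by video call), a one-time code from a separately enrolled device is something a voice clone or face swap cannot reproduce. The sketch below implements time-based one-time passwords (TOTP, RFC 6238) on top of HOTP (RFC 4226) with Node.js's built-in `crypto` module. Secret provisioning and storage are out of scope, and the one-step drift tolerance is a common but assumed choice.

```javascript
const crypto = require('crypto');

// HOTP (RFC 4226): HMAC-SHA1 over an 8-byte counter, dynamically truncated.
function hotp(secret, counter, digits = 6) {
  const message = Buffer.alloc(8);
  message.writeBigUInt64BE(BigInt(counter));
  const mac = crypto.createHmac('sha1', secret).update(message).digest();
  const offset = mac[mac.length - 1] & 0x0f;
  const binary =
    ((mac[offset] & 0x7f) << 24) |
    (mac[offset + 1] << 16) |
    (mac[offset + 2] << 8) |
    mac[offset + 3];
  return String(binary % 10 ** digits).padStart(digits, '0');
}

// TOTP (RFC 6238): HOTP with counter = floor(unix time / step).
function totp(secret, unixSeconds, { step = 30, digits = 6 } = {}) {
  return hotp(secret, Math.floor(unixSeconds / step), digits);
}

// Accept codes from the current step or +/- `tolerance` steps of clock drift.
function verifyTotp(secret, code, unixSeconds, { step = 30, digits = 6, tolerance = 1 } = {}) {
  const expected = Buffer.from(String(code));
  for (let drift = -tolerance; drift <= tolerance; drift++) {
    const t = unixSeconds + drift * step;
    if (t < 0) continue; // no counters before the Unix epoch
    const candidate = Buffer.from(totp(secret, t, { step, digits }));
    // timingSafeEqual requires equal lengths, so check that first.
    if (candidate.length === expected.length && crypto.timingSafeEqual(candidate, expected)) {
      return true;
    }
  }
  return false;
}

// RFC 6238 test vector: SHA-1 secret "12345678901234567890", T = 59 s, 8 digits.
console.log(totp('12345678901234567890', 59, { digits: 8 })); // 94287082
```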

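```javascript
// Content watermarking example (sketch).
// generateCryptoWatermark() and embedInvisibleWatermark() are hypothetical
// helpers standing in for a real watermarking library.
function addWatermark(content) {
  // Generate a cryptographically derived watermark payload
  const watermark = generateCryptoWatermark();
  // Embed the payload imperceptibly and return the marked content
  return embedInvisibleWatermark(content, watermark);
}
```

Platform Integration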

- Social media platform APIs for content scanning

- Content management systems (CMS) integration

- Video streaming platforms' detection pipelines

- News and media outlet verification workflows

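```javascript
// Detection API integration (sketch).
// deepfakeDetector is a hypothetical client. This assumes analyze() resolves
// to an object whose `confidence` is the probability (0 to 1) that the media
// is authentic; if your detector reports the probability of manipulation,
// invert the comparison.
async function verifyContent(mediaUrl) {
  const result = await deepfakeDetector.analyze(mediaUrl);
  // Require high confidence before labeling media authentic; flag the rest
  return result.confidence > 0.95 ? 'authentic' : 'suspicious';
}
```

Regulatory Measures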

- Deepfake disclosure and labeling requirements

- Criminal penalties for malicious use

- Platform liability for hosting harmful synthetic content

- International cooperation and treaties on AI misuse

Industry Standards

- C2PA for content provenance and authenticity

- Project Origin for media authenticity

- IEEE standards for synthetic media

- Partnership on AI (PAI) ethical guidelines

Incident Response

- Rapid content takedown procedures

- Victim notification and support systems

- Evidence preservation protocols for legal action

- Law enforcement and cybersecurity agency coordination
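Evidence preservation protocols usually start by fixing a cryptographic hash of each collected file and recording every handling step, which is also one way to build the immutable audit trails listed under provenance tracking. The sketch below keeps a hash-chained chain-of-custody log in memory: each entry stores the SHA-256 hash of the evidence and the hash of the previous entry, so altering an earlier entry breaks every later link. The entry fields and in-memory storage are illustrative assumptions; a production system would also sign entries and persist them to write-once storage.

```javascript
const crypto = require('crypto');

const sha256 = (data) => crypto.createHash('sha256').update(data).digest('hex');
const GENESIS = '0'.repeat(64); // placeholder "previous hash" for the first entry

// Append a custody event; each entry commits to the previous entry's hash.
function appendCustodyEntry(log, evidenceBytes, action, actor, timestamp = new Date().toISOString()) {
  const prevHash = log.length ? log[log.length - 1].entryHash : GENESIS;
  const body = { evidenceHash: sha256(evidenceBytes), action, actor, timestamp, prevHash };
  log.push({ ...body, entryHash: sha256(JSON.stringify(body)) });
  return log;
}

// Recompute every link. Editing or reordering entries, or deleting any entry
// but the last, breaks the chain (detecting truncation needs the latest hash
// stored somewhere else).
function verifyCustodyLog(log) {
  let prevHash = GENESIS;
  for (const entry of log) {
    const { entryHash, ...body } = entry;
    if (body.prevHash !== prevHash || sha256(JSON.stringify(body)) !== entryHash) {
      return false;
    }
    prevHash = entryHash;
  }
  return true;
}

const video = Buffer.from('example suspect video bytes');
const log = [];
appendCustodyEntry(log, video, 'collected', 'analyst-a');
appendCustodyEntry(log, video, 'transferred to lab', 'analyst-b');
console.log(verifyCustodyLog(log)); // true
log[0].actor = 'someone-else';      // tamper with the first entry
console.log(verifyCustodyLog(log)); // false
```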

Recovery Measures

- Reputation management and restoration

- Counter-narrative development and deployment

- Legal remediation and compensation assistance

- Public awareness campaigns

Phase 1: Assessment & Planning

Phase 2: Implementation & Deployment

Phase 3: Optimization & Evolution

Real-World Case Studies

Learning from actual incidents helps organizations understand the real-world impact of generative AI threats and develop effective response strategies. Each case study includes key lessons and actionable takeaways.

A sophisticated disinformation campaign used AI-generated deepfake videos of political candidates to spread false information during the 2024 election cycle. The videos drew over 50 million views before being detected and removed.

Scale & Impact

- 50M+ video views

- 200+ deepfake videos

- 15 countries affected

- 72-hour detection delay

Technical Analysis

- High-quality face swap and lip sync

- Advanced voice cloning technology

- Automated social media distribution

- Sophisticated anti-detection measures

Response Measures

- Platform-wide content removal

- Enhanced fact-checking initiatives

- Rapid detection algorithm updates

- International cooperation and information sharing

A criminal network created deepfake videos of celebrities endorsing fraudulent investment schemes, causing over $10 million in losses before international law enforcement shut the operation down.

Financial Impact

- $10M+ in victim losses

- 5,000+ victims affected

- 25 celebrity likenesses used

- 6-month operation duration

Criminal Methods

- Realistic celebrity face synthesis

- Sophisticated voice cloning technology

- Targeted social media advertising

- Creation of fake investment platforms

Investigation & Resolution

- Multi-agency international task force

- Advanced digital forensics analysis

- Global law enforcement cooperation

- 12 arrests made, assets seized

Researchers extracted copyrighted images and personal photos that Stable Diffusion had memorized from its training data, using novel model inversion techniques. This highlights the privacy and IP risks associated with large generative models.

Comprehensive analysis of generative AI security threats, current detection capabilities, and emerging mitigation strategies across various industries.

Related Security Research

Explore related AI security topics and vulnerability analysis