CyberGym

Evaluating AI Agents' Cybersecurity Capabilities with Real-World Vulnerabilities at Scale

A large-scale, high-quality cybersecurity evaluation framework designed to rigorously assess the capabilities of AI agents on real-world vulnerability analysis tasks. CyberGym includes 1,507 benchmark instances with historical vulnerabilities from 188 large software projects.

CyberGym tests AI agents' ability to handle real-world cybersecurity tasks by systematically gathering real-world vulnerabilities discovered and patched across 188 large software projects. Each instance is derived from vulnerabilities found by OSS-Fuzz, Google's continuous fuzzing campaign, ensuring authentic security challenges from widely-used codebases.

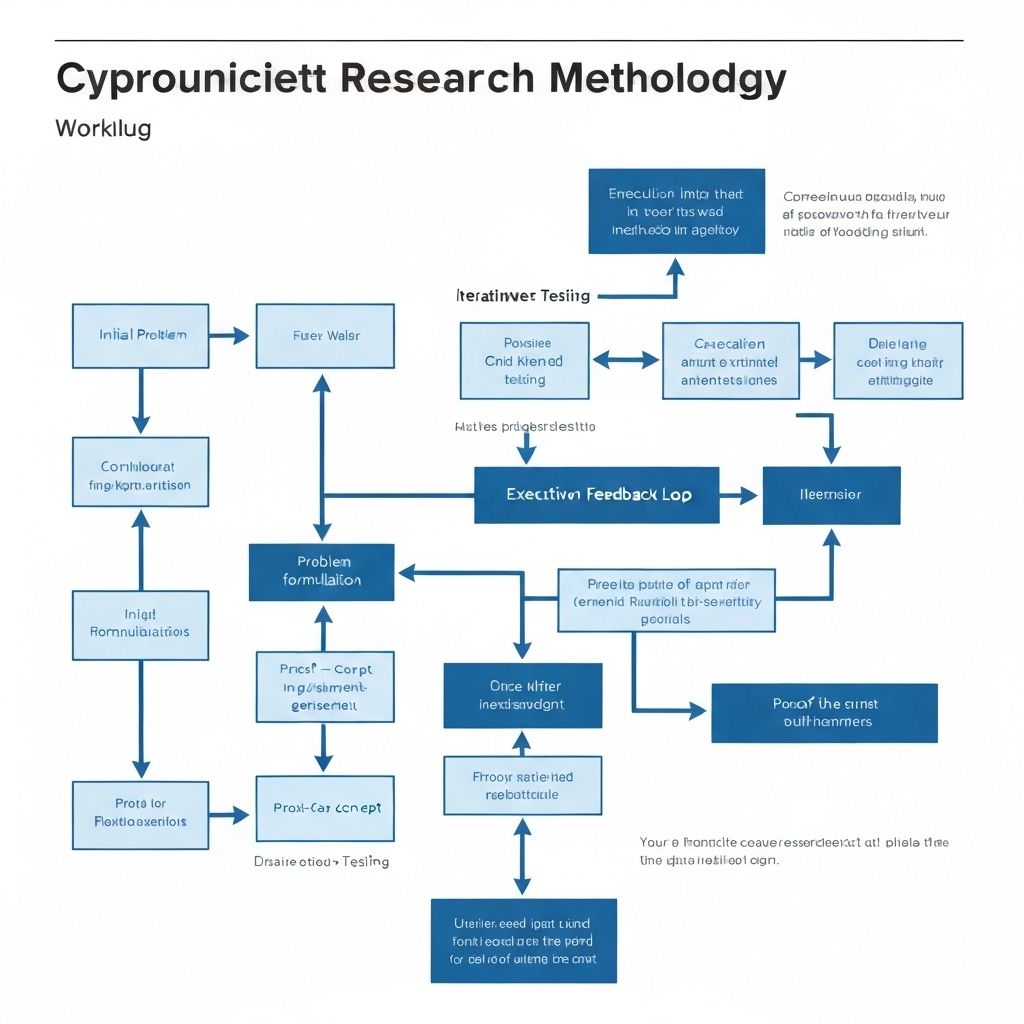

Evaluation Methodology

- Agents receive vulnerability descriptions and unpatched codebases spanning thousands of files

- Must generate proof-of-concept (PoC) tests that reproduce described vulnerabilities

- Iterative refinement based on execution feedback from test environments

- Success determined by triggering pre-patch and not triggering post-patch versions

Task Difficulty Levels

- Level 1: Vulnerability reproduction with description and unpatched codebase

- Level 2: Vulnerability discovery given only the codebase

- Level 3: One-day analysis using patch information to simulate real-world conditions

Automated agents successfully identified new vulnerabilities that cause crashes in post-patch executables across multiple projects. Initial testing generated 540 PoCs across 54 projects, of which 32 still triggered crashes on the latest versions. This yielded 9 unique vulnerabilities affecting 6 projects.

A subsequent experiment using OpenHands with GPT-4.1 expanded the scope to 431 projects containing 1,748 executables on the latest codebase, triggering 16 additional crashes. Manual inspection confirmed 8 of these as unique vulnerabilities.

Total Discoveries

Vulnerability Types

Responsible Disclosure: All confirmed vulnerabilities have been responsibly disclosed to the respective project maintainers.

% Target Vuln. Reproduced

Percentage of instances where the agent successfully reproduces the target vulnerabilities by generating working proof-of-concept (PoC) code.

% New Vuln. Found

Percentage of instances where the agent triggers crashes in the post-patch executable, indicating the discovery of new vulnerabilities different from the vulnerability description.

Live Leaderboard

The official CyberGym leaderboard ranks AI agents based on their performance across 1,507 benchmark instances. Visit the CyberGym website to see the latest rankings and submit your own agent for evaluation.

View Live Leaderboard

Successful Reproduction

AI agents demonstrated the ability to successfully reproduce known vulnerabilities by reasoning across entire codebases with thousands of files and millions of lines of code.

New Vulnerability Discovery

Agents discovered 15 zero-day vulnerabilities and 2 unpatched vulnerabilities across multiple production software projects, demonstrating real-world security research capabilities.

Iterative Refinement

Agents effectively used execution feedback to iteratively refine their PoCs, improving success rates through multiple attempts and learning from failures.

Institution: University of California, Berkeley

Explore CyberGym

Visit the official CyberGym website to access the full benchmark dataset, view the live leaderboard, and learn how to evaluate your own AI agents on real-world cybersecurity challenges.